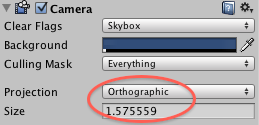

Continuing our series on 2D game development in Unity3D, we take a look at the final method we’ve used so far. When the GUI Class or a Sprite Manager System doesn’t cut it, you might want to look at the game through a different camera, using orthographic projection.

Unity3D

2D Game Prototyping in Unity3D: Sprite Manager Systems

The simplest way to create 2D games in Unity3D might not always be the best way. In this post we take a look at the possibilities and drawbacks of Sprite Manager Systems and compare them to simply using the GUI Class.

2D Game Prototyping in Unity3D Using the GUI Class

For many of our prototypes we use Unity3D, even when the gameplay is actually 2D. There are several different ways to create 2D games and prototypes in Unity3D. We’ll go over them in the coming weeks, starting with the easiest: the GUI class.

Unity Roadmap 2011: Three Features we’re Most Wild About

As we work with Unity3D a lot, we closely follow the company’s every move. They just published a roadmap of short-term changes they plan to make, and we’ve listed the three features we want to see most.

Ürban Pad

We’re constantly on the lookout for new ways of quickly building environments to use in our prototypes. Ürban Pad prides itself on being one of the fastest ways to create a 3D city. Let’s find out if reality can match its claims.

Unity and PlayMaker: The best of both worlds

In a previous post we talked about the severe limitations of PlayMaker, but since we’re quite charmed by the possibilities of the technology, we devised a workaround that lets us profit from the best aspects of both Unity3D and PlayMaker.

Research: PlayMaker

To state that there are a lot of plug-ins available for Unity3D would be quite the understatement. As is to be expected, not everything is useful to a prototyping company, so we do a lot of research to find the stuff that’s right up our alley. On today’s menu: PlayMaker.

Writing PlayerPrefs, Fast

The massive amount of feedback on our previous blog post about saving data in a platform-independent way in Unity3D motivated us to talk about this topic some more. There’s another way to do this, and for those planning to process a lot of data, we’ve even made some improvements of our own.

File I/O in Unity3D

It only seems apt to share some programming tips and tricks from time to time. At the moment, there is no platform-independent way to save data in Unity3D. If you want to open a file for reading or writing, you need to use the proper path. Here’s how to do this.